A database management system (DBMS) is a software application that allows users to efficiently store, manage, and manipulate vast amounts of data. It acts as an intermediary between users and the database, providing a structured and organized approach to data storage and retrieval. A DBMS offers several key features, including data definition, data manipulation, and data control.

In data definition, a DBMS provides tools for creating and modifying the structure of a database, specifying the data types, relationships, and constraints. This allows users to define the schema, or the logical and physical organization of the data. In data manipulation, a DBMS offers a wide range of operations to insert, retrieve, update, and delete data from the database. Users can execute complex queries and transactions to extract meaningful information from the stored data. Data control ensures data integrity, security, and concurrency by managing user access rights, enforcing data validation rules, and providing mechanisms for data backup and recovery.
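As a rough illustration of the first two feature groups, the sketch below uses Python's built-in `sqlite3` module. The `employee` table and its columns are made up for this example, and data control is not shown because SQLite has no user accounts or privilege system.

```python
import sqlite3


def run_demo():
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database

    # Data definition: declare structure, data types, and constraints.
    conn.execute(
        "CREATE TABLE employee ("
        " id INTEGER PRIMARY KEY,"
        " name TEXT NOT NULL,"
        " salary REAL CHECK (salary >= 0))"
    )

    # Data manipulation: insert, update, delete, then retrieve.
    conn.executemany(
        "INSERT INTO employee (id, name, salary) VALUES (?, ?, ?)",
        [(1, "Ada", 5200.0), (2, "Grace", 6100.0), (3, "Edgar", 4800.0)],
    )
    conn.execute("UPDATE employee SET salary = salary + 300 WHERE id = ?", (3,))
    conn.execute("DELETE FROM employee WHERE id = ?", (1,))
    rows = conn.execute(
        "SELECT name, salary FROM employee ORDER BY id"
    ).fetchall()
    conn.close()
    return rows
```

Calling `run_demo()` returns the two remaining rows, with the updated salary for the third employee.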

A DBMS offers numerous advantages over traditional file-based systems. It provides a centralized and shared data repository, which reduces data redundancy and the inconsistencies that redundancy causes. This promotes data integrity and reduces data maintenance efforts. Additionally, a DBMS supports concurrent access, allowing multiple users to access and manipulate the database simultaneously while the system coordinates conflicting operations. It protects data by enforcing user authentication and authorization, so that only authorized individuals can access the data. Furthermore, a DBMS offers scalability and performance optimizations, enabling efficient handling of large datasets and complex queries. Overall, a DBMS plays a critical role in modern data management by providing a robust, efficient, and secure platform for storing and manipulating data.

History of DBMS

The history of database management systems (DBMS) can be traced back to the 1960s, when the concept of data management emerged as a response to the increasing need for efficient storage and retrieval of large volumes of data. During this time, the hierarchical and network models were introduced in the earliest DBMS. The hierarchical model organized data in a tree-like structure with parent-child relationships, while the network model represented data as interconnected records. These early models laid the foundation for subsequent developments in the field.

In 1970, Edgar F. Codd proposed the relational model, which revolutionized the field of DBMS. The relational model introduced the concept of tables, where data was organized into rows and columns, and relationships between tables were established using primary and foreign keys. This model offered a simple and flexible way to represent data and allowed for powerful query operations using Structured Query Language (SQL), which was developed at IBM later in the decade.

The 1980s witnessed the rise of commercial DBMS products, such as Oracle, IBM DB2, and Microsoft SQL Server. These systems provided robust transaction management, concurrency control, and data integrity mechanisms. In the 1990s, object-oriented DBMS (OODBMS) emerged, which combined the features of DBMS with object-oriented programming concepts. OODBMS aimed to handle complex data types and support the storage of objects directly in the database.

With the advent of the internet and the proliferation of web applications in the late 1990s and early 2000s, there was a need for DBMS that could handle the massive amounts of unstructured data generated by these applications. This led to the development of NoSQL databases, which focused on scalability, high availability, and flexible data models. NoSQL databases such as MongoDB and Cassandra, both released in the late 2000s, gained popularity for their ability to handle big data and real-time data processing.

Today, DBMS continues to evolve with advancements in technology. Cloud-based DBMS, in-memory databases, and distributed databases are some of the recent trends in the field. These developments have allowed for greater scalability, performance, and ease of use, enabling organizations to effectively manage and analyze vast amounts of data.

| Decade | Milestones |
| --- | --- |
| 1960s | Introduction of hierarchical and network models |
| 1970s | Introduction of the relational model and SQL |
| 1980s | Commercialization of DBMS products (Oracle, DB2, SQL Server) |
| 1990s | Emergence of object-oriented DBMS (OODBMS) |
| Late 1990s and early 2000s | Rise of NoSQL databases for handling unstructured data |
| Present | Evolution of DBMS with cloud-based, in-memory, and distributed databases |

Characteristics of a Database Management System

Data Independence:

DBMS provides a layer of abstraction between the physical representation of data and the applications that use it. This allows changes to the database structure without affecting the applications that access the data. There are two types of data independence: logical independence (ability to modify the logical schema without affecting the external schema) and physical independence (ability to modify the physical schema without affecting the logical schema).
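One way to picture logical independence is a view that stays stable while the tables behind it are reorganized. The sketch below uses Python's `sqlite3` module. All table and view names are hypothetical, and the view stands in for the external schema that the application sees.

```python
import sqlite3


def app_query(conn):
    # The "application" only knows the view and its two columns.
    return conn.execute(
        "SELECT name, email FROM customer_contact ORDER BY name"
    ).fetchall()


def demo_logical_independence():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, email TEXT);
        INSERT INTO customer VALUES (1, 'Ada', 'ada@example.com');
        CREATE VIEW customer_contact AS SELECT name, email FROM customer;
    """)
    before = app_query(conn)

    # Logical schema change: split the single table into two tables,
    # then redefine the view so it exposes the same columns as before.
    conn.executescript("""
        DROP VIEW customer_contact;
        CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE person_email (
            person_id INTEGER REFERENCES person(id),
            email TEXT
        );
        INSERT INTO person SELECT id, name FROM customer;
        INSERT INTO person_email SELECT id, email FROM customer;
        DROP TABLE customer;
        CREATE VIEW customer_contact AS
            SELECT p.name AS name, e.email AS email
            FROM person AS p
            JOIN person_email AS e ON e.person_id = p.id;
    """)
    after = app_query(conn)
    conn.close()
    return before, after
```

`app_query` is never edited, yet it returns the same rows before and after the restructuring.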

Data Integrity:

DBMS enforces integrity constraints to ensure the accuracy and consistency of data. It enforces entity integrity (primary key constraints), referential integrity (foreign key constraints), domain integrity (data type and range constraints), and user-defined integrity rules. This prevents invalid or inconsistent data from entering the database.
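The sketch below shows how such constraints might reject bad rows, using Python's `sqlite3` module. The `department` and `employee` tables and the age range are assumptions made for the example. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`.

```python
import sqlite3


def try_insert(conn, params):
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO employee VALUES (?, ?, ?)", params)
        return "accepted"
    except sqlite3.IntegrityError:
        return "rejected"


def demo_integrity():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite
    conn.execute(
        "CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE employee ("
        " id INTEGER PRIMARY KEY,"                              # entity integrity
        " dept_id INTEGER NOT NULL REFERENCES department(id),"  # referential
        " age INTEGER CHECK (age BETWEEN 18 AND 70))"           # domain
    )
    conn.execute("INSERT INTO department VALUES (1, 'Research')")
    conn.commit()

    results = {
        "valid row": try_insert(conn, (1, 1, 30)),
        "duplicate primary key": try_insert(conn, (1, 1, 40)),
        "unknown department": try_insert(conn, (2, 99, 40)),
        "age out of range": try_insert(conn, (3, 1, 12)),
    }
    conn.close()
    return results
```

Only the first insert is accepted. The other three each violate one kind of constraint and raise `sqlite3.IntegrityError`.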

Data Security:

DBMS provides mechanisms to control access to the database and protect the data from unauthorized access, modification, or destruction. It offers user authentication and authorization, allowing administrators to define user roles and privileges. Access control is enforced at the level of tables, views, and individual data items. DBMS also supports data encryption and auditing to ensure data privacy and track database activities.

Data Concurrency:

DBMS allows multiple users to access the database concurrently without data conflicts. It manages concurrent access through techniques like locking and transaction isolation levels. Locking mechanisms ensure that only one user can modify a piece of data at a time, while isolation levels define the visibility of changes made by one transaction to other concurrent transactions. This ensures data consistency and prevents data anomalies.
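The visibility rule can be observed with two connections to the same SQLite file, as in the sketch below. It is only an illustration: SQLite locks at the level of the whole database file, whereas many server DBMS use row-level locks or multiversion concurrency control. The `account` table is a made-up example.

```python
import os
import sqlite3
import tempfile


def count_accounts(conn):
    # fetchall() exhausts the cursor so no read lock lingers afterwards.
    return conn.execute("SELECT COUNT(*) FROM account").fetchall()[0][0]


def demo_isolation():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.db")
        writer = sqlite3.connect(path)
        reader = sqlite3.connect(path)
        writer.execute(
            "CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)"
        )
        writer.commit()

        # The INSERT implicitly opens a transaction on the writer connection.
        writer.execute("INSERT INTO account VALUES (1, 100)")
        seen_before_commit = count_accounts(reader)  # uncommitted row is hidden
        writer.commit()
        seen_after_commit = count_accounts(reader)   # committed row is visible

        writer.close()
        reader.close()
        return seen_before_commit, seen_after_commit
```

The reader sees the new row only after the writer commits, so it never observes the intermediate state of the other transaction.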

Data Recovery and Backup:

DBMS provides mechanisms for data recovery in case of system failures, crashes, or human errors. It maintains transaction logs and uses techniques like write-ahead logging and checkpoints to ensure durability of committed transactions. DBMS also supports backup and restore operations to safeguard data against disasters and allows for point-in-time recovery.
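The idea behind write-ahead logging can be sketched with a toy key-value store. This is a simplified, redo-only variant written for illustration, not how any particular DBMS implements it: the `ToyStore` class, its record format, and the in-memory list standing in for a durable log file are all assumptions.

```python
class ToyStore:
    """Toy key-value store with a write-ahead log (illustrative only)."""

    def __init__(self, log=None):
        # The list stands in for a durable, append-only log file.
        self.log = log if log is not None else []
        # The dict stands in for data pages that are lost in a crash.
        self.data = {}

    def begin(self, txid):
        self.log.append(("BEGIN", txid))

    def write(self, txid, key, value):
        # The change is logged first; the data is not touched yet.
        self.log.append(("SET", txid, key, value))

    def commit(self, txid):
        # The COMMIT record reaches the log before the data is updated.
        self.log.append(("COMMIT", txid))
        for rec in self.log:
            if rec[0] == "SET" and rec[1] == txid:
                self.data[rec[2]] = rec[3]

    @classmethod
    def recover(cls, log):
        # Redo, in log order, only the writes of committed transactions.
        store = cls(list(log))
        committed = {rec[1] for rec in log if rec[0] == "COMMIT"}
        for rec in log:
            if rec[0] == "SET" and rec[1] in committed:
                store.data[rec[2]] = rec[3]
        return store


def demo_recovery():
    store = ToyStore()
    store.begin(1)
    store.write(1, "a", 10)
    store.commit(1)
    store.begin(2)
    store.write(2, "a", 99)
    store.write(2, "b", 5)
    # Simulated crash here: transaction 2 never logged a COMMIT record.
    return ToyStore.recover(store.log).data
```

After recovery, only the effects of transaction 1 survive, which is the durability guarantee for committed work combined with atomicity for the interrupted transaction.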

Query Optimization and Performance:

DBMS optimizes query execution by evaluating various query plans and selecting the most efficient one. It uses techniques like indexing, query rewriting, and caching to improve query performance. DBMS also provides tools for monitoring and tuning the database system to optimize performance based on workload and resource utilization.
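The effect of an index on the chosen plan can be inspected in SQLite with `EXPLAIN QUERY PLAN`, as in this sketch. The `customer` table and the index name are hypothetical, and the exact wording of the plan text differs between SQLite versions, so the example only returns it rather than assuming a fixed string.

```python
import sqlite3


def plan(conn, sql, params=()):
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable plan step.
    return " | ".join(row[-1] for row in rows)


def demo_index_effect():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customer (id INTEGER PRIMARY KEY, email TEXT, city TEXT)"
    )
    conn.executemany(
        "INSERT INTO customer (email, city) VALUES (?, ?)",
        [(f"user{i}@example.com", "Oslo") for i in range(1000)],
    )
    query = "SELECT id, city FROM customer WHERE email = ?"
    arg = ("user42@example.com",)

    before = plan(conn, query, arg)  # no index yet: full table scan
    conn.execute("CREATE INDEX idx_customer_email ON customer (email)")
    after = plan(conn, query, arg)   # optimizer can now search the index
    conn.close()
    return before, after
```

Before the index exists the plan reports a scan of the table. Afterwards it reports a search that uses `idx_customer_email`, without any change to the query text.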

Scalability and Extensibility:

DBMS is designed to handle growing amounts of data and users. It supports scaling up (vertical scaling) by upgrading hardware resources and scaling out (horizontal scaling) by adding more servers to distribute the workload. DBMS also allows for adding new data types, modifying the schema, and incorporating new features without disrupting the existing applications.
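Scaling out usually requires a rule for deciding which server holds which record. A minimal sketch of one such rule, hash-based sharding, is shown below. The function names are invented for this example, and real systems differ in how they partition data.

```python
import hashlib


def shard_for(key, shard_count):
    # A stable hash keeps the mapping identical across processes and restarts
    # (Python's built-in hash() is randomized per process for strings).
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def distribute(keys, shard_count):
    # Group keys by the shard (server) that would store them.
    shards = [[] for _ in range(shard_count)]
    for key in keys:
        shards[shard_for(key, shard_count)].append(key)
    return shards


user_keys = [f"user:{i}" for i in range(100)]
placement = distribute(user_keys, 4)
```

A known drawback of plain modulo hashing is that changing the number of shards moves most keys. Consistent hashing is a common way to limit that movement.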

Data Integration and Sharing:

DBMS enables integration and sharing of data across multiple applications and users. It supports data modeling techniques such as normalization, which reduces data redundancy and helps keep data consistent, and denormalization, which deliberately reintroduces some redundancy to speed up reads. DBMS provides features like views, stored procedures, and triggers to encapsulate complex logic and facilitate data sharing and integration among different applications.
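As a small illustration of logic that lives inside the database, the sketch below defines a trigger and a view in SQLite through Python's `sqlite3` module. The table, trigger, and view names are made up, and stored procedures are left out because SQLite does not support them.

```python
import sqlite3


def demo_trigger_and_view():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT, price REAL);
        CREATE TABLE price_audit (
            product_id INTEGER, old_price REAL, new_price REAL
        );

        -- Trigger: every price change is recorded, whichever application made it.
        CREATE TRIGGER log_price_change
        AFTER UPDATE OF price ON product
        BEGIN
            INSERT INTO price_audit VALUES (OLD.id, OLD.price, NEW.price);
        END;

        -- View: a shared, read-oriented shape of the data for other applications.
        CREATE VIEW product_catalog AS SELECT name, price FROM product;

        INSERT INTO product VALUES (1, 'Widget', 9.5);
        UPDATE product SET price = 11.0 WHERE id = 1;
    """)
    audit = conn.execute(
        "SELECT product_id, old_price, new_price FROM price_audit"
    ).fetchall()
    catalog = conn.execute("SELECT name, price FROM product_catalog").fetchall()
    conn.close()
    return audit, catalog
```

Because the rule is stored with the data, every application that updates `product` gets the same auditing behavior without reimplementing it.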

Popular DBMS Software

- Oracle Database

- Microsoft SQL Server

- MySQL

- PostgreSQL

- IBM DB2

- MongoDB (NoSQL database)

- Cassandra (NoSQL database)

- Redis (in-memory data store)

- SQLite (lightweight embedded database)

- MariaDB (MySQL-compatible open-source database)

Types of DBMS

There are several types of DBMS based on their data models and architectural designs. Here are some common types of DBMS:

Relational DBMS (RDBMS):

This type of DBMS uses the relational data model, where data is organized into tables with rows and columns. It supports SQL for data manipulation and retrieval. Examples include Oracle Database, Microsoft SQL Server, MySQL, and PostgreSQL.
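A brief sketch of the relational idea, using SQLite through Python's `sqlite3` module: two tables are linked by a foreign key, and a SQL join combines them at query time. The tables and sample rows are invented for the example.

```python
import sqlite3


def employees_per_department():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE department (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE employee (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            dept_id INTEGER REFERENCES department(id)  -- foreign key
        );
        INSERT INTO department VALUES (1, 'Research'), (2, 'Sales');
        INSERT INTO employee VALUES
            (1, 'Ada', 1), (2, 'Grace', 1), (3, 'Edgar', 2);
    """)
    # The join matches each employee row to its department row by key.
    rows = conn.execute(
        "SELECT d.name, COUNT(e.id)"
        " FROM department AS d"
        " JOIN employee AS e ON e.dept_id = d.id"
        " GROUP BY d.id"
        " ORDER BY d.name"
    ).fetchall()
    conn.close()
    return rows
```

The query describes what result is wanted, and the DBMS decides how to match the rows.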

Object-Oriented DBMS (OODBMS):

OODBMS stores data as objects, which encapsulate both data and behavior. It extends the capabilities of traditional DBMS to handle complex data types and relationships. Examples include ObjectDB and Versant.

Hierarchical DBMS:

This type organizes data in a tree-like structure, where each child record has exactly one parent and a parent can have many children. It is suitable for representing one-to-many relationships. IBM’s Information Management System (IMS) is an example of a hierarchical DBMS.

Network DBMS:

Network DBMS is similar to hierarchical DBMS but allows for more complex relationships by using a graph-like structure. It facilitates many-to-many relationships between records. Integrated Data Store (IDS) and Integrated Database Management System (IDMS) are examples of network DBMS.

Object-Relational DBMS (ORDBMS):

ORDBMS combines features of RDBMS and OODBMS. It extends the relational model to support object-oriented concepts, such as inheritance and encapsulation. PostgreSQL and Oracle Database offer object-relational capabilities.

NoSQL DBMS:

NoSQL (Not Only SQL) DBMS is designed to handle unstructured and semi-structured data, providing high scalability and flexibility. It deviates from the traditional relational model and uses key-value, document-oriented, wide-column, or graph-based data models instead. Examples include MongoDB, Cassandra, Couchbase, and Neo4j.
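To give a feel for two of these data models without tying the example to a specific product, the sketch below imitates them with plain Python structures. The field names, the `find` helper, and the `user:<id>` key format are all hypothetical.

```python
import json

# Document model: records in one collection need not share the same fields.
documents = [
    {"_id": 1, "name": "Ada", "skills": ["math", "engines"]},
    {"_id": 2, "name": "Grace", "address": {"city": "Arlington"}},
]


def find(docs, **criteria):
    # Return documents whose top-level fields match every criterion.
    return [
        doc for doc in docs
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


# Key-value model: an opaque value is stored and fetched by its key only.
key_value = {f"user:{doc['_id']}": json.dumps(doc) for doc in documents}

grace = find(documents, name="Grace")
ada_again = json.loads(key_value["user:1"])
```

No schema had to be declared up front, which is the flexibility described above. The trade-off is that the structure must be checked by the application instead of the database.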

In-Memory DBMS:

In-Memory DBMS stores data primarily in main memory, offering fast data access and processing. It is optimized for high-performance applications that require real-time data processing. Examples include SAP HANA, Oracle TimesTen, and MemSQL (now SingleStore).

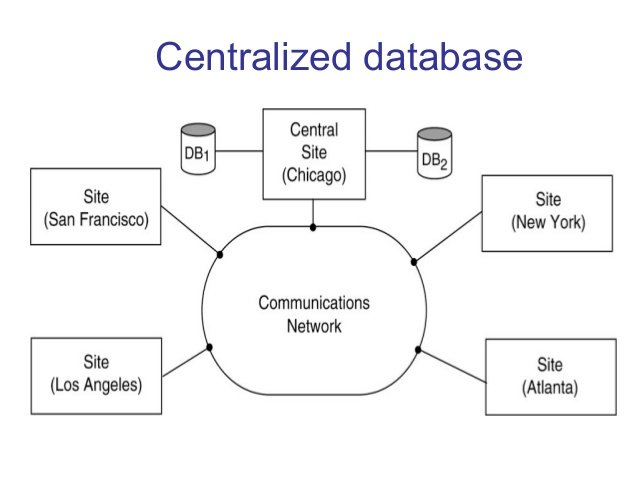

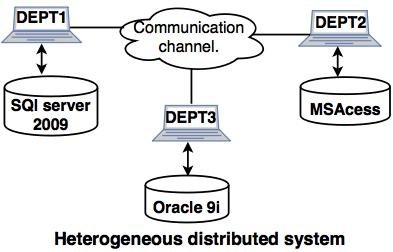

Distributed DBMS:

Distributed DBMS manages data stored on multiple interconnected computers or servers. It provides transparency and coordination across the distributed environment, allowing users to access and manipulate data as if it were stored in a single location. Google Bigtable, Apache Cassandra, and CockroachDB are examples of distributed DBMS. Apache Hadoop is often mentioned alongside them, although it is a distributed storage and processing framework rather than a DBMS in the strict sense.

Advantages of DBMS

Data Centralization:

DBMS allows for the centralized storage of data in a structured manner. This reduces data redundancy and, because users access and manipulate data from a single source, helps keep the data consistent.

Data Sharing and Accessibility:

DBMS enables multiple users to access and share data concurrently. It provides mechanisms for user authentication, authorization, and concurrency control, ensuring that users can access the data they need while preventing unauthorized access or data conflicts.

Data Consistency and Integrity:

DBMS enforces data integrity constraints, such as primary key, foreign key, and data type constraints. This ensures that data entered into the database is accurate and consistent. DBMS also provides transaction management, which guarantees the atomicity, consistency, isolation, and durability (ACID) properties of transactions.
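Atomicity in particular is easy to demonstrate. The sketch below, using Python's `sqlite3` module, moves money between two rows of a hypothetical `account` table. When the second update violates a constraint, the first update in the same transaction is undone as well.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    try:
        with conn:  # one transaction: commit on success, roll back on error
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # the credit above has been rolled back too


def demo_atomic_transfer():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE account ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.execute("INSERT INTO account VALUES (1, 100), (2, 50)")
    conn.commit()

    ok = transfer(conn, 1, 2, 30)        # enough funds: succeeds
    failed = transfer(conn, 1, 2, 500)   # would overdraw account 1
    balances = conn.execute(
        "SELECT id, balance FROM account ORDER BY id"
    ).fetchall()
    conn.close()
    return ok, failed, balances
```

After the failed transfer the balances are exactly what the successful one left behind, so no money is created or lost.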

Data Security:

DBMS offers robust security features to protect sensitive data. It provides user authentication and authorization mechanisms to control access to the data. DBMS allows administrators to define access privileges at the level of tables, views, or individual data items. Encryption, backup, and recovery mechanisms further enhance data security.

Data Independence and Flexibility:

DBMS provides logical and physical data independence. This means that changes to the database schema or physical storage structure can be made without affecting the applications that use the data. It offers flexibility in modifying and adapting the database as the requirements evolve.

Disadvantages of DBMS

Complexity and Cost:

Implementing and maintaining a DBMS can be complex and expensive. It requires specialized skills and expertise to design, deploy, and manage a database system. Organizations may need to invest in hardware, software licenses, and personnel training. The initial setup and ongoing maintenance costs can be significant.

Performance Overhead:

DBMS introduces performance overhead compared to direct file-based data management. The additional layers of abstraction and query processing can impact performance, especially for complex queries or large-scale data processing. Tuning and optimizing the DBMS configuration and queries are necessary to achieve optimal performance.

Single Point of Failure:

A DBMS can become a single point of failure for an entire system. If the DBMS experiences a failure or downtime, it can disrupt access to critical data and impact business operations. Implementing backup and recovery mechanisms is essential to mitigate the risk of data loss and ensure system availability.

Scalability Limitations:

While a DBMS offers scalability features, a single installation can still reach limits when handling massive volumes of data or high transaction loads. Scaling the system to accommodate growing data and user demands may require additional hardware, configuration changes, or distributed database architectures.

Vendor Dependency:

Adopting a specific DBMS often involves vendor lock-in, as migrating from one DBMS to another can be challenging and time-consuming. Organizations may rely on specific features, tools, or proprietary extensions provided by the chosen DBMS, which can limit their flexibility and make it difficult to switch to alternative solutions.

When Not to Use a DBMS?

While a Database Management System (DBMS) offers numerous benefits, there are certain scenarios where using a DBMS may not be the most suitable option. Here are a few situations when an alternative approach might be more appropriate:

Small-scale and Simple Data Storage:

If the data volume is small and the storage requirements are straightforward, using a DBMS may introduce unnecessary complexity and overhead. In such cases, a file-based system or simple data structures (e.g., flat files, spreadsheets) might be sufficient and more efficient to manage and manipulate the data.
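For a sense of how little is needed in that situation, the sketch below reads a small contact list in CSV form with Python's standard `csv` module. The sample data is invented, and an in-memory string stands in for a file on disk.

```python
import csv
import io

# Stand-in for the contents of a small flat file such as contacts.csv.
SAMPLE = "name,email\nAda,ada@example.com\nGrace,grace@example.com\n"


def load_contacts(text):
    # Each row becomes a dict keyed by the header line.
    return list(csv.DictReader(io.StringIO(text)))


contacts = load_contacts(SAMPLE)
emails = [row["email"] for row in contacts if row["name"] == "Grace"]
```

There is no schema, server, or query language to maintain, but also no constraint checking, concurrency control, or recovery, so this only fits data that stays small and has a single user.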

High Performance and Real-time Processing:

In applications that require extremely high performance and real-time processing, a DBMS may not provide the necessary speed or responsiveness. Direct memory access, specialized caching mechanisms, or custom data storage approaches may be more suitable in these situations to achieve the desired performance.

Frequent Changes to Data Structure:

If the data structure undergoes frequent and unpredictable changes, using a DBMS with a fixed schema might become cumbersome. Adapting the schema and managing data migrations can be time-consuming and complex. In such cases, a schema-flexible system such as a NoSQL database may offer more agility and ease of change than a traditional relational DBMS.

Limited Resources and Cost Constraints:

Implementing and maintaining a DBMS can require significant resources, including hardware, software licenses, and skilled personnel. If the organization has limited resources or tight budget constraints, investing in a DBMS might not be feasible. Instead, simpler data management solutions or cloud-based services could be more cost-effective.

Specific Performance or Functional Requirements:

In certain niche or specialized applications, where specific performance, functionality, or data processing requirements are crucial, a custom-built data management solution or specialized data storage systems may be more suitable. These solutions can be tailored to meet the specific needs of the application and provide optimized performance for the particular use case.

Ultimately, the decision to use a DBMS or an alternative approach depends on various factors such as data size, complexity, performance requirements, scalability, flexibility, and available resources. It’s essential to carefully evaluate the specific needs and constraints of the application to determine the most appropriate data management solution.
