The least squares method is the process of finding the best-fitting curve, or line of best fit, for a set of data points by minimizing the sum of the squares of the offsets (residuals) of the points from the curve. When the relation between two variables is being determined, the trend of the outcomes is estimated quantitatively. This process is termed regression analysis, and curve fitting is one approach to it. The least squares method is the curve-fitting technique that chooses the equation approximating the given raw data in this way.

The curve fitted to a particular data set is not always unique. It is therefore necessary to find the curve with the minimal deviation from all the measured data points. This is known as the best-fitting curve and is found by using the least-squares method.

Least Square Method

The least-squares method is a statistical method used to find a regression line, or best-fit line, for a given set of data. The fitted line or curve is described by an equation with specific parameters. The method of least squares is widely used in estimation and regression. In regression analysis, it is the standard approach for approximating the solution of overdetermined systems, that is, sets of equations with more equations than unknowns.
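To illustrate the case of more equations than unknowns, here is a minimal sketch that solves three equations in two unknowns in the least-squares sense. The system, the helper name `solve_overdetermined_2` and the use of the normal equations are assumptions chosen for illustration, not something prescribed by the text.

```python
def solve_overdetermined_2(rows, rhs):
    """Least-squares solution (x, y) of equations a*x + b*y = c.

    rows is a list of (a, b) coefficient pairs and rhs the list of c values.
    The solution comes from the 2x2 normal equations (A^T A) p = A^T c.
    """
    s_aa = sum(a * a for a, _ in rows)
    s_ab = sum(a * b for a, b in rows)
    s_bb = sum(b * b for _, b in rows)
    t_a = sum(a * c for (a, _), c in zip(rows, rhs))
    t_b = sum(b * c for (_, b), c in zip(rows, rhs))
    det = s_aa * s_bb - s_ab * s_ab
    x = (t_a * s_bb - s_ab * t_b) / det
    y = (s_aa * t_b - s_ab * t_a) / det
    return x, y

# Hypothetical inconsistent system: x + y = 2, x - y = 0, x + 2y = 4.
rows = [(1.0, 1.0), (1.0, -1.0), (1.0, 2.0)]
rhs = [2.0, 0.0, 4.0]
x, y = solve_overdetermined_2(rows, rhs)
```

No pair (x, y) satisfies all three equations exactly, so the result is the pair that makes the sum of the squared equation errors as small as possible.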

The method of least squares defines the solution as the one that minimizes the sum of the squares of the deviations, or errors, in the result of each equation. The formula for the sum of squared errors also helps to quantify the variation in the observed data.

The least-squares method is often applied in data fitting. The best fit is the one that minimizes the sum of squared errors, or residuals, where a residual is the difference between an observed or experimental value and the corresponding fitted value given by the model.
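As a small sketch of this idea, the snippet below computes the sum of squared residuals for two candidate models on the same data. The data values and the two candidate lines are made up for illustration.

```python
def sum_squared_residuals(xs, ys, model):
    """Sum of squared differences between each observed y and model(x)."""
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys))

# Hypothetical observations, invented for this example.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 5.0, 8.0]

def line_a(x):
    return 2.0 * x        # candidate model y = 2x

def line_b(x):
    return x + 1.0        # candidate model y = x + 1

sse_a = sum_squared_residuals(xs, ys, line_a)
sse_b = sum_squared_residuals(xs, ys, line_b)
```

The candidate with the smaller sum of squared residuals (here `line_a`) is the better fit of the two in the least-squares sense.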

There are two basic categories of least-squares problems:

- Ordinary or linear least squares

- Nonlinear least squares

These categories depend on whether the residuals are linear or nonlinear in the unknown parameters. Linear problems are often seen in regression analysis in statistics. Nonlinear problems, on the other hand, are generally solved by iterative refinement, in which the model is approximated by a linear one at each iteration.
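The iterative refinement used for nonlinear problems can be sketched with the Gauss-Newton iteration, one common scheme of this kind. The model y = a·exp(b·x), the starting values and the data below are assumptions chosen for illustration; the text does not name a specific algorithm.

```python
import math

def gauss_newton_exponential(xs, ys, a, b, iterations=50, tol=1e-12):
    """Fit y = a * exp(b * x) by solving a linearized problem at each step."""
    for _ in range(iterations):
        # Accumulate the 2x2 normal equations (J^T J) delta = J^T r.
        s_aa = s_ab = s_bb = g_a = g_b = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            r = y - a * e        # residual at the current parameters
            ja = e               # derivative of the model with respect to a
            jb = a * x * e       # derivative of the model with respect to b
            s_aa += ja * ja
            s_ab += ja * jb
            s_bb += jb * jb
            g_a += ja * r
            g_b += jb * r
        det = s_aa * s_bb - s_ab * s_ab
        da = (g_a * s_bb - s_ab * g_b) / det
        db = (s_aa * g_b - s_ab * g_a) / det
        a += da
        b += db
        if abs(da) + abs(db) < tol:
            break
    return a, b

# Hypothetical noise-free data generated from a = 2, b = 0.5.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a_fit, b_fit = gauss_newton_exponential(xs, ys, a=1.5, b=0.4)
```

Each pass replaces the nonlinear model with its first-order (linear) approximation around the current parameters and solves the resulting linear least-squares problem. Convergence is not guaranteed in general and depends on the starting values.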

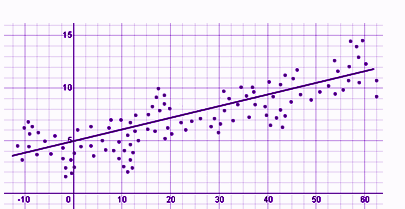

Least Square Method Graph

In linear regression, the line of best fit is a straight line as shown in the following diagram:

The line is fitted by minimizing the residuals, or offsets, of each data point from the line. In common practice the vertical offsets are minimized, for lines as well as for polynomial, surface and hyperplane problems, because this leads to a much simpler analysis; perpendicular offsets are used less often.
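The difference between the two kinds of offset can be made concrete with a short sketch. The line y = 0.75x + 1 and the point (4, 6.5) are hypothetical values chosen so that the arithmetic is easy to follow.

```python
import math

def vertical_offset(x, y, slope, intercept):
    """Signed vertical distance from the point (x, y) to the line."""
    return y - (slope * x + intercept)

def perpendicular_offset(x, y, slope, intercept):
    """Signed shortest (perpendicular) distance from the point to the line."""
    return vertical_offset(x, y, slope, intercept) / math.sqrt(1.0 + slope ** 2)

# Hypothetical line y = 0.75x + 1 and data point (4, 6.5).
v = vertical_offset(4.0, 6.5, 0.75, 1.0)
p = perpendicular_offset(4.0, 6.5, 0.75, 1.0)
```

The perpendicular offset is never larger in magnitude than the vertical one, and the two coincide only for a horizontal line.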

Least Square Method Formula

The least-squares method states that the curve that best fits a given set of observations is the curve with the minimum sum of squared residuals (or deviations or errors) from the given data points. Let us assume that the given data points are (x1,y1), (x2,y2), (x3,y3), …, (xn,yn), in which all x’s are values of the independent variable and all y’s are values of the dependent variable. Also, suppose that f(x) is the fitting curve and d represents the error or deviation at each given point.

Now, we can write:

d1 = y1 − f(x1)

d2 = y2 − f(x2)

d3 = y3 − f(x3)

…..

dn = yn − f(xn)

The least-squares principle states that the best-fitting curve is the one for which the sum of the squares of all the deviations from the given values is a minimum, i.e.:

Sum = d1² + d2² + d3² + … + dn² = Σ [yi − f(xi)]² = Minimum Quantity
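For the special case of a straight line, f(x) = a + b·x, the minimum can be found in closed form. The sketch below uses the standard formulas b = Σ(xi − x̄)(yi − ȳ) / Σ(xi − x̄)² and a = ȳ − b·x̄; the straight-line model and the data values are assumptions made for illustration.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    s_xy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    s_xx = sum((x - x_mean) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = y_mean - slope * x_mean
    return intercept, slope

def sum_of_squares(xs, ys, intercept, slope):
    """Sum of squared deviations d_i = y_i - f(x_i) for f(x) = intercept + slope * x."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))

# Hypothetical data points, invented for this example.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
intercept, slope = fit_line(xs, ys)
best = sum_of_squares(xs, ys, intercept, slope)
# Any other line gives a sum that is at least as large.
other = sum_of_squares(xs, ys, intercept + 0.1, slope)
```

For this data the fitted line is y = 2.2 + 0.6x, and shifting the intercept away from the fitted value increases the sum of squares.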

Limitations for Least-Square Method

The least-squares method is a very useful method of curve fitting. Despite its many benefits, it has a few shortcomings too. One of the main limitations is discussed here.

In regression analysis that uses the least-squares method for curve fitting, it is implicitly assumed that the errors in the independent variable are negligible or zero. When the errors in the independent variable are non-negligible, the model is subject to measurement error. In that case, parameter estimates, hypothesis tests and confidence intervals must take into account the errors in the independent variable.
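One way to see that the method treats the independent variable as error-free is to swap the roles of the two variables and fit again. The sketch below does this with made-up data; the helper name `fit_slope` is hypothetical.

```python
def fit_slope(us, vs):
    """Slope of the least-squares line of v on u, with u treated as error-free."""
    n = len(us)
    u_mean = sum(us) / n
    v_mean = sum(vs) / n
    s_uv = sum((u - u_mean) * (v - v_mean) for u, v in zip(us, vs))
    s_uu = sum((u - u_mean) ** 2 for u in us)
    return s_uv / s_uu

# Hypothetical data points, invented for this example.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]

slope_y_on_x = fit_slope(xs, ys)         # minimizes vertical offsets
slope_x_on_y = fit_slope(ys, xs)         # minimizes horizontal offsets
implied_slope = 1.0 / slope_x_on_y       # same line expressed as dy/dx
```

The two fits give different lines (slopes 0.6 and 1.0 for this data) because each one attributes all of the error to a single variable. They agree only when the points lie exactly on a line.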