McCabe’s Cyclomatic Complexity: Calculate with Flow Graph (Example)

Cyclomatic Complexity is a software metric that quantifies the complexity of a program’s control flow. It counts the number of linearly independent paths through the source code, so a higher value means more branching. The metric can be applied at different levels, such as functions, methods, classes, or modules.

The calculation of Cyclomatic Complexity can be performed using control flow graphs. Control flow graphs visually represent a program as a graph composed of nodes and edges. In this context, nodes represent processing tasks, and edges represent the control flow between these tasks.

Cyclomatic Complexity is valuable for software testers and developers as it helps in identifying areas of code that may be prone to errors, challenging to understand, or require additional testing efforts. Lowering Cyclomatic Complexity is often associated with improved code maintainability and reduced risk of defects.

Key points about Cyclomatic Complexity:

- Definition of Independent Paths:

An independent path is a path through the control flow graph that introduces at least one edge not already covered by the paths defined before it. Each independent path represents a unique sequence of decisions and branches in the code.

- Calculation Methods:

Cyclomatic Complexity can be calculated using different methods, including control flow graphs. The formula commonly used is:

M = E − N + 2P

Where:

M is the Cyclomatic Complexity,

E is the number of edges in the control flow graph,

N is the number of nodes in the control flow graph,

P is the number of connected components (usually 1 for a single program).

- Control Flow Representation:

Control flow graphs provide a visual representation of how control flows through a program. Nodes represent distinct processing tasks, and edges depict the flow of control between these tasks.

- Metric Development:

Thomas J. McCabe introduced Cyclomatic Complexity in 1976 as a metric based on the control flow representation of a program. It has since become a widely used measure for assessing the complexity of software code.

- Graph Structure:

The structure of the control flow graph influences the Cyclomatic Complexity. Loops, conditionals, and branching statements contribute to the creation of multiple paths in the graph, increasing its complexity.
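The formula M = E − N + 2P can be sketched as a small helper. This is a minimal illustration; the function name `cyclomatic_complexity` and its parameter names are assumptions made for this example, not part of any standard library.

```python
def cyclomatic_complexity(num_edges: int, num_nodes: int, num_components: int = 1) -> int:
    """Return M = E - N + 2P for a control flow graph.

    num_components (P) defaults to 1, the usual case for a single program.
    """
    if num_edges < 0 or num_nodes < 1 or num_components < 1:
        raise ValueError("expected E >= 0, N >= 1 and P >= 1")
    return num_edges - num_nodes + 2 * num_components


# A graph with 9 edges and 8 nodes in one connected component.
print(cyclomatic_complexity(num_edges=9, num_nodes=8))  # 3
```

A straight-line program with two nodes and one edge gives 1 − 2 + 2 = 1, the lowest possible value: there is exactly one path through it.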

Flow graph notation for a program:

A flow graph notation is a visual representation of the control flow in a program, illustrating how the execution of the program progresses through different statements, branches, and loops. It helps in understanding the structure and logic of the code. One common notation for flow graphs includes nodes and edges, where nodes represent program statements or processing tasks, and edges represent the flow of control between these statements.

Explanation of the flow graph notation elements:

- Nodes:

Nodes in a flow graph represent individual program statements or processing tasks. Each node typically corresponds to a specific line or block of code.

- Edges:

Edges in the flow graph represent the flow of control between nodes. An edge connects two nodes and indicates the order in which the statements are executed.

- Entry and Exit Points:

Entry and exit points are special nodes that represent the start and end of the program. The flow of control begins at the entry point and ends at the exit point.

- Decision Nodes (Diamond Shape):

Decision nodes represent conditional statements, such as if-else conditions. They have multiple outgoing edges, each corresponding to a possible outcome of the condition.

- Process Nodes (Rectangle Shape):

Process nodes represent sequential processing tasks or statements. They have a single incoming edge and a single outgoing edge.

- Merge Nodes (Circle or Rounded Rectangle Shape):

Merge nodes are used to show the merging of control flow from different branches. They have multiple incoming edges and a single outgoing edge.

- Loop Nodes (Curved Edges):

Loop nodes represent iterative structures such as for and while loops. They carry a loop condition, and a back edge returns control to an earlier point in the graph.

- Connector Nodes:

Connector nodes are used to connect different parts of the flow graph, providing a way to organize and simplify complex graphs.

Example:

Consider a simple pseudocode example:

- Start

- Read input A

- Read input B

- If A > B

  - Print “A is greater”

- Else

  - Print “B is greater”

- End

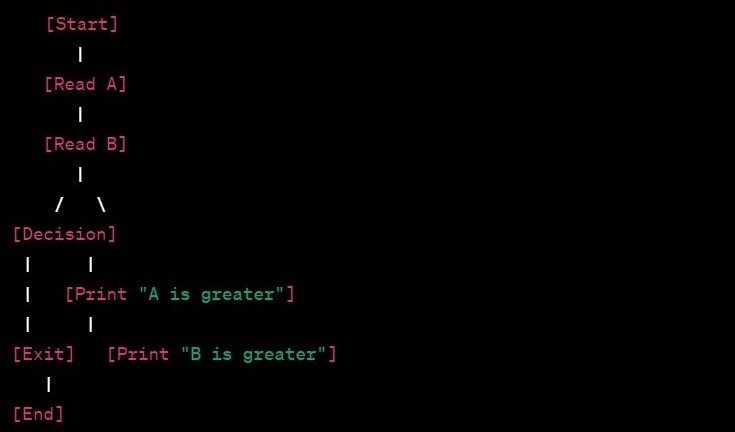

The Corresponding Flow Graph notation might look like this:

In this example, nodes represent different statements or tasks, and edges show the flow of control between them. The decision node represents the conditional statement, and the graph provides a visual representation of the program’s control flow.
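As a worked check, the pseudocode above can be written as an adjacency list and run through M = E − N + 2P. The sketch below assumes one node per statement; the node labels and the helper name `complexity_from_graph` are illustrative choices, and a drawn graph may group the sequential statements differently without changing the result.

```python
# Control flow graph for the pseudocode example, one node per statement.
# Each key is a node; its list holds the nodes that control can flow to next.
cfg = {
    "Start": ["Read A"],
    "Read A": ["Read B"],
    "Read B": ["If A > B"],
    "If A > B": ["Print A is greater", "Print B is greater"],
    "Print A is greater": ["End"],
    "Print B is greater": ["End"],
    "End": [],
}


def complexity_from_graph(graph, components=1):
    """Compute M = E - N + 2P, assuming every node appears as a key."""
    nodes = len(graph)
    edges = sum(len(targets) for targets in graph.values())
    return edges - nodes + 2 * components


print(complexity_from_graph(cfg))  # 2
```

With 7 nodes and 7 edges, M = 7 − 7 + 2 = 2, which matches the two ways through the if-else.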

Properties of Cyclomatic Complexity:

- Quantitative Measure:

Cyclomatic Complexity provides a quantitative measure of the complexity of a software program. The higher the Cyclomatic Complexity value, the more complex the program’s control flow is considered.

- Based on Control Flow Graph:

Cyclomatic Complexity is calculated based on the control flow graph (CFG) of a program. The control flow graph visually represents the structure of the program, with nodes representing statements and edges representing the flow of control between statements.

- Independent Paths:

Cyclomatic Complexity equals the number of linearly independent paths in the control flow graph. An independent path is one that includes at least one edge not covered by the paths already counted.

- Risk Indicator:

Higher Cyclomatic Complexity values are often associated with increased program risk. Programs with higher complexity may be more prone to errors, more challenging to understand, and may require more extensive testing efforts.

- Testing Effort:

Cyclomatic Complexity is used as an indicator of the testing effort required for a program. Programs with higher complexity may require more thorough testing to ensure adequate coverage of different control flow paths.

- Code Maintainability:

There is a correlation between Cyclomatic Complexity and code maintainability. Higher complexity can make code more challenging to maintain, understand, and modify. Reducing Cyclomatic Complexity is often associated with improving code quality.

- Thresholds and Guidelines:

There is no universally agreed-upon threshold for an acceptable Cyclomatic Complexity value. McCabe originally suggested 10 as a reasonable upper limit for a single module, and many guidelines still use values in that range as a warning sign. Teams may establish their own thresholds based on project requirements and industry best practices.

- Tool Support:

Various software development tools and static analysis tools provide support for calculating Cyclomatic Complexity. These tools can automatically generate control flow graphs and calculate the complexity of code.

- Code Refactoring:

Cyclomatic Complexity is often used as a guide for code refactoring. Reducing complexity can lead to more maintainable, readable, and less error-prone code.
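To give a feel for what automated tool support involves, here is a rough sketch that estimates the complexity of Python source by counting decision points and adding one. For structured code with a single entry and exit, this shortcut generally agrees with E − N + 2. The set of node types counted and the function name `approximate_complexity` are assumptions for this example; real analysis tools differ in what they count.

```python
import ast

# Syntax nodes treated as decision points in this sketch (an assumption;
# actual tools make their own choices here).
_DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def approximate_complexity(source: str) -> int:
    """Estimate cyclomatic complexity as (number of decision points) + 1."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, _DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra conditions to evaluate.
            decisions += len(node.values) - 1
    return decisions + 1


sample = '''
def compare(a, b):
    if a > b:
        return "A is greater"
    else:
        return "B is greater"
'''
print(approximate_complexity(sample))  # 2
```

The sketch treats the whole source string as one unit; a per-function report would need to walk each function definition separately.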

How Is This Metric Useful for Software Testing?

- Identifying Test Cases:

Cyclomatic Complexity helps in identifying the number of independent paths through a program. Each independent path represents a potential test case. Testing all these paths can provide comprehensive coverage and increase the likelihood of detecting defects.

- Testing Effort Estimation:

Higher Cyclomatic Complexity values often indicate a more complex program structure, which may require more testing effort. Teams can use this metric to estimate the testing effort needed to ensure adequate coverage of different control flow paths.

- Focus on High-Complexity Areas:

Testers can prioritize testing efforts by focusing on areas of the code with higher Cyclomatic Complexity. These areas are more likely to contain complex logic and potential sources of defects, making them important candidates for thorough testing.

- Risk Assessment:

Cyclomatic Complexity is a useful indicator of program risk. Higher complexity may be associated with increased potential for errors. Testers can use this information to assess the risk associated with different parts of the code and allocate testing resources accordingly.

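- Path Coverage:

Cyclomatic Complexity gives the number of linearly independent paths through a program, not the total number of paths, which can be much larger when decisions are chained or loops are present. Testing one case per independent path, often called basis path testing, exercises every branch at least once and helps ensure that the main execution scenarios are considered.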

- Code Maintainability:

High Cyclomatic Complexity can make code more challenging to maintain. Testing can help identify potential issues in complex code early in the development process, facilitating code reviews and refactoring efforts to improve maintainability.

- Test Case Design:

Cyclomatic Complexity supports test case design by guiding the creation of test scenarios that cover different decision points and branches in the code. It helps ensure that tests are designed to exercise various logical conditions and combinations.

- Quality Improvement:

Regularly monitoring Cyclomatic Complexity and addressing high-complexity areas can contribute to overall code quality. By identifying and testing complex code segments, teams can reduce the likelihood of defects and improve the reliability of the software.

- Integration Testing:

In integration testing, where interactions between different components are tested, Cyclomatic Complexity can guide the selection of test cases to ensure thorough coverage of integrated paths and potential integration points.

- Regression Testing:

When changes are made to the codebase, testers can use Cyclomatic Complexity to assess the impact of those changes on different control flow paths. This information aids in designing effective regression test suites.
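The link between paths and test cases can be sketched by listing every entry-to-exit path in a small graph. The example below assumes an acyclic graph for a single if-else, with hypothetical node names; `enumerate_paths` is an illustrative helper, not a standard function. For this graph the number of paths equals the Cyclomatic Complexity of 2. With several decisions in sequence or with loops, the full path count grows beyond M, and a basis set of M paths has to be chosen instead.

```python
def enumerate_paths(graph, start, end):
    """Yield every start-to-end path in an acyclic control flow graph."""
    stack = [(start, [start])]
    while stack:
        node, path = stack.pop()
        if node == end:
            yield path
            continue
        for successor in graph[node]:
            stack.append((successor, path + [successor]))


# Hypothetical graph for "if A > B ... else ...", with illustrative labels.
cfg = {
    "start": ["decision"],
    "decision": ["print_a", "print_b"],
    "print_a": ["end"],
    "print_b": ["end"],
    "end": [],
}

# Each printed path corresponds to one candidate test case.
for path in enumerate_paths(cfg, "start", "end"):
    print(" -> ".join(path))
```

One test with A > B and one with A ≤ B would cover the two paths listed.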

Uses of Cyclomatic Complexity:

- Code Quality Assessment:

Cyclomatic Complexity provides a quantitative measure of code complexity. It helps assess the overall quality of the codebase, with higher values indicating more complex and potentially harder-to-understand code.

- Defect Prediction:

High Cyclomatic Complexity is often associated with an increased likelihood of defects. Teams can use this metric as an indicator to predict areas of the code that may have a higher risk of containing defects.

- Code Review and Refactoring:

Cyclomatic Complexity is a valuable tool during code reviews. High values can highlight areas for potential improvement. Developers can target high-complexity code segments for refactoring to enhance code readability and maintainability.

- Test Case Design:

Cyclomatic Complexity helps in designing test cases by identifying the number of independent paths through the code. Testers can use this information to ensure comprehensive test coverage, especially in areas with complex decision logic.

- Testing Effort Estimation:

Teams can use Cyclomatic Complexity to estimate the testing effort required for a program. Higher complexity values may suggest the need for more extensive testing to cover various control flow paths adequately.

- Resource Allocation:

Cyclomatic Complexity assists in allocating development and testing resources effectively. Teams can prioritize efforts based on the complexity of different code segments, focusing more attention on high-complexity areas.

- Code Maintainability:

As Cyclomatic Complexity correlates with code readability and maintainability, developers and teams can use this metric to identify areas in the code that may benefit from refactoring or improvement to enhance long-term maintainability.

- Guidance for Code Reviews:

During code reviews, Cyclomatic Complexity values can guide reviewers to pay special attention to high-complexity areas. It serves as a flag for potential issues that require thorough examination.

- Project Management:

Project managers can use Cyclomatic Complexity to assess the overall complexity of a software project. This information aids in project planning, risk management, and resource allocation.

- Benchmarking:

Teams can use Cyclomatic Complexity as a benchmarking metric to compare different versions of a program or to assess the complexity of codebases in different projects. This can provide insights into code evolution and help set quality standards.

- Continuous Improvement:

Cyclomatic Complexity can be used as part of a continuous improvement process. Regularly monitoring and addressing high-complexity areas contribute to ongoing efforts to enhance code quality and maintainability.

- Tool Integration:

Many software development tools and integrated development environments (IDEs) provide support for calculating Cyclomatic Complexity. Developers can integrate this metric into their development workflow for real-time feedback.
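Several of the uses above come down to ranking code by complexity and flagging what exceeds a limit. A minimal sketch of that step follows. The function names and scores in `scores` are made-up sample data, and the default threshold of 10 is only an assumption; a team would substitute values reported by its own tooling and its own limit.

```python
def flag_complex_functions(scores, threshold=10):
    """Return (name, score) pairs above the threshold, most complex first."""
    flagged = [(name, score) for name, score in scores.items() if score > threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical per-function complexity values, for illustration only.
scores = {"parse_order": 14, "format_date": 3, "apply_discount": 11, "main": 6}

for name, score in flag_complex_functions(scores):
    print(f"{name}: {score}")
```

The flagged list can feed a code review checklist or a build step that warns when a function crosses the chosen limit.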
