Evaluating predictive models is a critical step in the machine learning workflow. It involves assessing the performance, generalization capabilities, and reliability of a model on unseen data. Model evaluation is essential for making informed decisions about deploying a model in real-world applications.

It is a nuanced, iterative process that is crucial for building reliable and effective machine learning systems. The choice of evaluation metrics depends on the nature of the problem, the type of model, and the specific goals of the application. Whether you are working on classification or regression tasks, understanding the strengths and limitations of the various evaluation techniques lets you judge whether a model is ready for deployment. Revisiting and refining the evaluation process regularly helps ensure that models continue to perform well on new, unseen data in real-world scenarios.

Classification Model Evaluation:

Confusion Matrix:

A confusion matrix is a fundamental tool for evaluating the performance of a classification model. It provides a tabulation of the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions.

- Accuracy: The proportion of correctly classified instances:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision: The proportion of true positives among all predicted positives:

$$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall (Sensitivity or True Positive Rate): The proportion of true positives among all actual positives:

$$\text{Recall} = \frac{TP}{TP + FN}$$

- F1 Score: The harmonic mean of precision and recall:

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
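
The four formulas above can be checked with a few lines of plain Python. The sketch below tallies the confusion-matrix cells for a binary problem with labels 0 and 1 and derives the metrics from them. The helper names `confusion_counts`, `safe_div`, and `binary_metrics` are hypothetical, and reporting 0.0 when a denominator is zero is an assumed convention rather than a universal rule.

```python
# Hypothetical helpers illustrating the confusion-matrix formulas (binary labels 0/1).


def confusion_counts(y_true, y_pred, positive=1):
    """Tally TP, TN, FP and FN for a binary problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def safe_div(num, den):
    # Assumed convention: report 0.0 when a metric is undefined (den == 0).
    return num / den if den else 0.0


def binary_metrics(y_true, y_pred, positive=1):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
print(confusion_counts(y_true, y_pred))  # (TP, TN, FP, FN) → (3, 2, 2, 1)
print(binary_metrics(y_true, y_pred))
```

Here precision (0.6) and recall (0.75) differ, and F1 lands between them at about 0.667. In practice, libraries such as scikit-learn provide these metrics directly (for example `sklearn.metrics.confusion_matrix` and `sklearn.metrics.f1_score`); the hand-rolled version only makes the formulas concrete.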

- ROC Curve and AUC:

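Receiver Operating Characteristic (ROC) curves are used to visualize the trade-off between the true positive rate (sensitivity) and the false positive rate (1 − specificity) at various classification thresholds. The Area Under the Curve (AUC) summarizes the model’s performance across all thresholds in a single number: it equals the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one (counting ties as half).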

- AUC values close to 1 indicate excellent performance, while an AUC close to 0.5 means the model separates the classes no better than random guessing.
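
To make the threshold sweep concrete, here is a minimal sketch, assuming binary labels 0/1 and a real-valued score where a higher value means "more likely positive". It lowers the threshold past each distinct score, records one (FPR, TPR) point per step, and integrates the resulting curve with the trapezoidal rule. The function names `roc_points` and `trapezoid_area` are illustrative, not a library API.

```python
# Illustrative ROC/AUC computation (hypothetical helper names; labels assumed to be 0/1).


def roc_points(y_true, scores):
    """(FPR, TPR) pairs obtained by lowering the threshold past each distinct score."""
    n_pos = sum(1 for label in y_true if label == 1)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("ROC needs at least one positive and one negative example")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def trapezoid_area(points):
    """Area under a piecewise-linear curve whose points are sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
points = roc_points(y_true, scores)
print(points)
print(trapezoid_area(points))  # → 0.75
```

One of the two negatives (score 0.4) outranks one of the two positives (score 0.35), so 3 of the 4 positive/negative pairs are ordered correctly, which matches the AUC of 0.75.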

Precision-Recall Curve:

The precision-recall curve is especially useful when dealing with imbalanced datasets. It illustrates the trade-off between precision and recall at different classification thresholds.

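- Average Precision (AP): A single score summarizing the precision-recall curve across all thresholds. It averages the precision at each threshold, weighted by the increase in recall from the previous threshold, and approximates the area under the curve.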
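
Average precision can be computed with the same kind of threshold sweep. This sketch uses the step-wise definition AP = sum over n of (R_n − R_(n−1)) × P_n, where P_n and R_n are the precision and recall at the n-th threshold. To our understanding this is the definition documented for scikit-learn's `average_precision_score`, but the code is an independent illustration that assumes 0/1 labels, and the function name is hypothetical.

```python
# Illustrative step-wise average precision (hypothetical helper; labels assumed to be 0/1).


def average_precision(y_true, scores):
    """AP = sum over thresholds of (R_n - R_{n-1}) * P_n, with no interpolation."""
    n_pos = sum(1 for label in y_true if label == 1)
    if n_pos == 0:
        raise ValueError("average precision needs at least one positive example")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = 0.0
    ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Treat tied scores as a single threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        precision = tp / (tp + fp)
        recall = tp / n_pos
        ap += (recall - prev_recall) * precision  # only recall gains contribute
        prev_recall = recall
    return ap


print(average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # ≈ 0.833
```

Because only thresholds that raise recall contribute, AP rewards rankings that place positives early, which is why it is informative on imbalanced data where negatives dominate.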

Cross-Validation:

Cross-validation is a technique for assessing a model’s performance by dividing the dataset into k subsets (folds). The model is trained on k − 1 folds and tested on the remaining fold, and the process is repeated k times so that each fold serves as the test set exactly once; the k scores are then averaged. Common methods include k-fold cross-validation and stratified k-fold cross-validation, which preserves the class proportions of the full dataset in every fold.

- Cross-validation helps ensure that the model’s performance is representative across different subsets of the data.
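
The fold bookkeeping is simple enough to write by hand. The sketch below, with hypothetical helper names, shuffles indices into k folds and, for the stratified variant, deals each class round-robin across the folds so that every test fold keeps roughly the original class proportions. In production code, scikit-learn's `KFold` and `StratifiedKFold` classes (in `sklearn.model_selection`) cover the same ground.

```python
import random
from collections import defaultdict

# Hypothetical fold generators illustrating k-fold and stratified k-fold splitting.


def _splits(folds):
    # Each fold serves as the test set once; the remaining folds form the training set.
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield sorted(train), sorted(test)


def k_fold(n_samples, k, seed=0):
    """Shuffle the indices, then deal them into k folds of near-equal size."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return _splits([indices[i::k] for i in range(k)])


def stratified_k_fold(labels, k, seed=0):
    """Deal each class's indices round-robin so every fold keeps roughly the class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    position = 0  # continues across classes so fold sizes stay balanced
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        for idx in members:
            folds[position % k].append(idx)
            position += 1
    return _splits(folds)


labels = [0] * 8 + [1] * 4  # imbalanced: two thirds class 0
for train_idx, test_idx in stratified_k_fold(labels, k=4):
    print(len(train_idx), [labels[i] for i in test_idx])  # prints 9 [0, 0, 1] for each fold
```

With 8 examples of class 0 and 4 of class 1, each of the four test folds ends up with two 0s and one 1, mirroring the 2:1 ratio of the full dataset. Plain `k_fold` gives no such guarantee, which matters most when one class is rare.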

Classification Report:

The classification report provides a comprehensive summary of various evaluation metrics, including precision, recall, F1 score, and support (the number of actual occurrences of each class).

- It is particularly useful for multi-class classification problems, because it reports these metrics separately for each class.

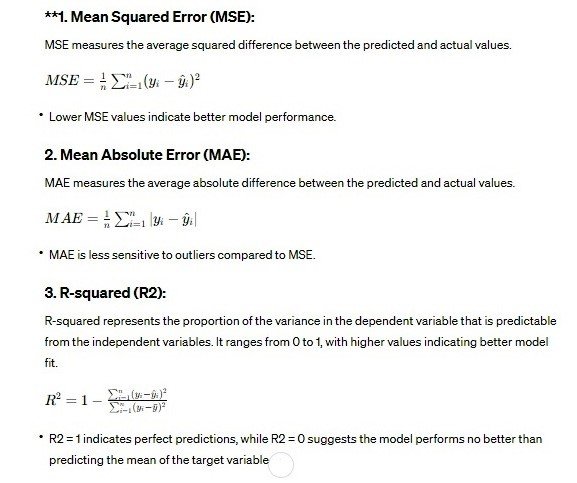
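
A classification report is essentially the binary metrics computed one class at a time, treating that class as positive and all others as negative. The following sketch builds it from three counters. The `per_class_report` name and the toy labels are illustrative; scikit-learn's `classification_report` produces a formatted version with the same precision, recall, F1, and support columns.

```python
from collections import Counter

# Hypothetical one-vs-rest report mirroring the columns of a classification report.


def per_class_report(y_true, y_pred):
    """Precision, recall, F1 and support for each class, treating it one-vs-rest."""
    support = Counter(y_true)  # actual occurrences of each class
    predicted = Counter(y_pred)  # how often each class was predicted
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = correct[label]
        precision = tp / predicted[label] if predicted[label] else 0.0
        recall = tp / support[label] if support[label] else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1, "support": support[label]}
    return report


y_true = ["cat", "cat", "dog", "dog", "bird", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "bird", "cat"]
report = per_class_report(y_true, y_pred)
for label, row in report.items():
    print(f"{label:>5}  precision={row['precision']:.2f}  recall={row['recall']:.2f}  "
          f"f1={row['f1']:.2f}  support={row['support']}")
```

The per-class view exposes what a single accuracy figure hides: here "dog" has perfect recall but lower precision because the model over-predicts it, while "bird" shows the opposite pattern.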

Regression Model Evaluation:

Residual Analysis:

Residual analysis involves examining the distribution of residuals (the differences between actual and predicted values). Key components include:

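- Residual Plot: A scatter plot of residuals against predicted values helps reveal systematic patterns (suggesting the model misses structure in the data) or heteroscedasticity (a residual spread that changes with the predicted value).

- Normality Tests: Checking whether residuals follow a normal distribution, using statistical tests such as the Shapiro–Wilk test or graphical checks such as Q-Q plots.

- Homoscedasticity Tests: Checking whether residuals have constant variance across the range of predictions, for example with the Breusch–Pagan test.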
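
The residual plot is the primary tool, but a few summary numbers help when reading it. This sketch, which assumes residuals are defined as actual minus predicted, reports the residual mean, standard deviation, and moment-based skewness, plus a crude spread ratio comparing residual variance for the highest and lowest halves of the predictions. The spread ratio and the `residual_diagnostics` name are illustrative assumptions, not a formal test; for formal normality and homoscedasticity tests, libraries such as SciPy (`scipy.stats.shapiro`) and statsmodels (`het_breuschpagan`) are the usual choices.

```python
import statistics

# Hypothetical residual summary; spread_ratio is a heuristic, not a formal statistical test.


def residual_diagnostics(y_true, y_pred):
    """Summary numbers to read alongside a residual-vs-predicted plot."""
    res = [t - p for t, p in zip(y_true, y_pred)]  # residual = actual - predicted
    mean = statistics.fmean(res)
    sd = statistics.pstdev(res)
    # Moment-based skewness: close to 0 for symmetric (e.g. normal) residuals.
    skew = sum((r - mean) ** 3 for r in res) / len(res) / sd ** 3 if sd else 0.0
    # Crude spread check: residual variance for the highest half of predictions
    # divided by that for the lowest half (values far from 1 hint at heteroscedasticity).
    order = sorted(range(len(res)), key=lambda i: y_pred[i])
    half = len(order) // 2
    low_var = statistics.pvariance([res[i] for i in order[:half]])
    high_var = statistics.pvariance([res[i] for i in order[-half:]])
    spread_ratio = high_var / low_var if low_var else float("inf")
    return {"mean": mean, "sd": sd, "skew": skew, "spread_ratio": spread_ratio}


y_pred = [1, 2, 3, 4, 5, 6, 7, 8]
y_true = [1.1, 1.9, 3.2, 3.8, 6.0, 5.0, 9.0, 6.0]  # errors grow with the prediction
print(residual_diagnostics(y_true, y_pred))
```

In the toy data the errors grow with the prediction, so the spread ratio comes out around 100, the kind of signal a fan-shaped residual plot would show, while the mean and skewness stay near zero because the errors are symmetric.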

Cross-Validation:

Similar to classification, cross-validation is valuable for regression models. Techniques like k-fold cross-validation or leave-one-out cross-validation provide a more robust estimate of a model’s generalization performance.

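Cross-validation does not by itself prevent overfitting, but it helps detect it: a large gap between training error and cross-validated error is a warning sign. It also gives insight into how the model is likely to perform on unseen data.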
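
Leave-one-out cross-validation is easy to illustrate with a one-feature least-squares line. The sketch below refits the line n times, each time holding out one point, and compares the averaged held-out error with the error measured on the training data itself. The helper names are hypothetical, and mean squared error is an assumed scoring choice, since the text does not prescribe a regression metric.

```python
# Hypothetical LOOCV sketch for simple linear regression, scored with mean squared error.


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x with a single feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def squared_error(y, x, intercept, slope):
    return (y - (intercept + slope * x)) ** 2


def loocv_mse(xs, ys):
    """Leave-one-out CV: refit without point i, then score the model on point i."""
    errors = []
    for i in range(len(xs)):
        intercept, slope = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        errors.append(squared_error(ys[i], xs[i], intercept, slope))
    return sum(errors) / len(errors)


xs = [1, 2, 3, 4, 5, 6]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]
intercept, slope = fit_line(xs, ys)
train_mse = sum(squared_error(y, x, intercept, slope) for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE: {train_mse:.4f}")
print(f"LOOCV MSE:    {loocv_mse(xs, ys):.4f}")
```

For ordinary least squares the LOOCV error cannot be smaller than the training error, because each held-out residual equals the ordinary residual scaled by 1 / (1 − leverage) of that point; the gap between the two numbers is the optimism that cross-validation exposes.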

Common Considerations for Model Evaluation:

- Overfitting and Underfitting:

- Overfitting: Occurs when a model performs well on the training data but poorly on unseen data. Regularization techniques, cross-validation, and monitoring training/validation performance can help mitigate overfitting.

- Underfitting: Occurs when a model is too simplistic to capture the underlying patterns in the data. Increasing model complexity or using more advanced algorithms may be necessary.

- Bias-Variance Tradeoff:

- Bias: Error introduced by approximating a real-world problem with an overly simple model, causing it to miss relevant relationships between the features and the target. High bias can result in underfitting.

- Variance: Error introduced by the model’s sensitivity to small fluctuations in the training data, typically because the model is too complex and ends up fitting noise. High variance can result in overfitting.

Finding an appropriate balance between bias and variance is crucial for model generalization.
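
The trade-off can be seen by varying one complexity knob and watching training and validation error. This sketch swaps in k-nearest-neighbour regression, which the text does not mention, on synthetic data (y = 2x plus Gaussian noise, an assumption made purely for illustration). With k = 1 the model memorizes the training set (low bias, high variance), while k equal to the training-set size always predicts the training mean (high bias, low variance).

```python
import random

# Illustrative setup (assumptions): synthetic data y = 2x + Gaussian noise and
# k-nearest-neighbour regression, where k controls model complexity.


def make_data(n, seed):
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 2) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    # Average the targets of the k training points closest to x.
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


train_x, train_y = make_data(40, seed=1)
val_x, val_y = make_data(40, seed=2)

results = {}
for k in (1, 5, 40):  # flexible -> rigid; k=40 always predicts the training mean
    train_err = mse(train_y, [knn_predict(train_x, train_y, x, k) for x in train_x])
    val_err = mse(val_y, [knn_predict(train_x, train_y, x, k) for x in val_x])
    results[k] = (train_err, val_err)
    print(f"k={k:2d}  training MSE={train_err:6.2f}  validation MSE={val_err:6.2f}")
```

Expect k = 1 to show zero training error but a clearly larger validation error (overfitting) and k = 40 to show large errors on both sets (underfitting); an intermediate k typically balances the two.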

- Hyperparameter Tuning:

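Hyperparameter tuning involves adjusting a model’s hyperparameters (settings chosen before training, such as regularization strength or tree depth) to optimize its performance on validation data. Techniques include grid search, random search, and more advanced methods such as Bayesian optimization.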
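
Grid search and random search differ only in which combinations they try. The sketch below assumes a user-supplied `score_fn` that trains a model with the given hyperparameters and returns a validation or cross-validated score (higher is better). The `validation_score` function and its `alpha`/`degree` parameters are a hypothetical toy stand-in so the example runs without any training code.

```python
import random
from itertools import product

# Hypothetical search helpers; score_fn is assumed to return a validation score (higher is better).


def grid_search(param_grid, score_fn):
    """Try every combination in param_grid and return the best (params, score)."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(param_grid, score_fn, n_iter, seed=0):
    """Score n_iter randomly sampled combinations instead of the full grid."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(values) for name, values in param_grid.items()}
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def validation_score(alpha, degree):
    # Hypothetical stand-in: in practice, train with these settings and return
    # a validation (or cross-validated) score.
    return -((alpha - 0.1) ** 2 + (degree - 3) ** 2)


grid = {"alpha": [0.01, 0.1, 1.0], "degree": [1, 2, 3, 4]}
print(grid_search(grid, validation_score))
print(random_search(grid, validation_score, n_iter=5))
```

The cost of grid search grows multiplicatively with each added hyperparameter, which is why random search, which spends a fixed budget of sampled combinations, is often preferred for large search spaces.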

- Feature Importance:

Understanding feature importance helps identify which features contribute most to a model’s predictions. Techniques like permutation importance or model-specific feature importance methods (e.g., tree-based models’ feature importance) can be applied.
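
Permutation importance needs nothing but a fitted model and a scoring function: shuffle one feature column, re-score, and record how much the score drops. This sketch follows that idea (scikit-learn offers it as `sklearn.inspection.permutation_importance`). The `predict` function is a hypothetical fitted model that uses only feature 0, and negative mean squared error is an assumed scoring choice.

```python
import random

# Hypothetical permutation-importance sketch; rows of X are lists of feature values.


def neg_mse(y_true, y_pred):
    # Higher is better, so a drop after shuffling means the feature mattered.
    return -sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, score_fn, n_repeats=5, seed=0):
    """Mean drop in score when each feature column is shuffled, breaking its link to y."""
    rng = random.Random(seed)
    baseline = score_fn(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - score_fn(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical fitted model: it relies on feature 0 and ignores feature 1.
def predict(row):
    return 3 * row[0]


X = [[i, (i * 7) % 5] for i in range(20)]
y = [3 * row[0] for row in X]
print(permutation_importance(predict, X, y, neg_mse))
```

Shuffling the ignored feature leaves the score unchanged, so its importance is exactly zero, while shuffling feature 0 causes a large drop. In real use, compute the importances on held-out data so they reflect generalization rather than memorization.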

- Model Robustness:

Robust models perform well across different subsets of the data and under various conditions. Ensuring a model is robust requires testing its performance on diverse datasets and in scenarios it may encounter in the real world.